Is it really true or did you read it online?

This article is an overview of my understanding of Lesson 1: Disinformation, taught by Rachel Thomas. It also serves as my notes for the course and as the start of a blog post series meant to raise awareness of various concepts in fairness and ethics.

What is Disinformation?

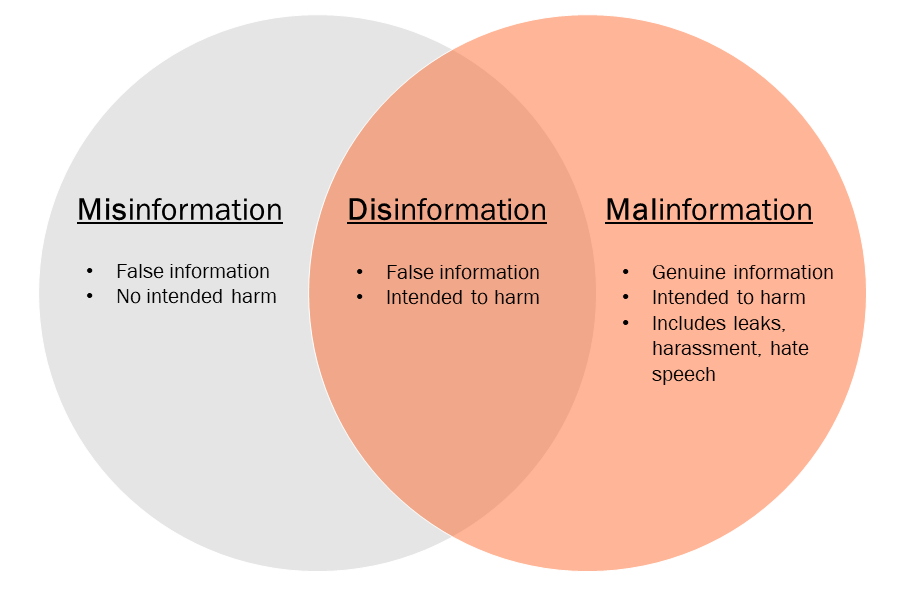

Often, we come across various articles, memes or any other content while browsing social media that we consider as “fake” or “misleading”. These articles could’ve either purposely been posted on the platform to mislead users or it could’ve been a genuine mistake by the poster. This results in untrue information being forwarded to the audience either purposely or as an accident. Thus in such cases, there could be 3 major forms of information which can be observed from the following figure.

We can therefore see that disinformation is the most dangerous kind of information to receive from such platforms, or from any source for that matter, because it is deliberately intended to harm the receiver.

The next point to consider is whether this harm is caused by the algorithm processing the information or intended by the person who posted it. Intuitively, we tend to believe that algorithms misinterpret everything and are what spreads disinformation. This is not true in general, although it has proven true in many cases.

Case Studies on Misleading Information

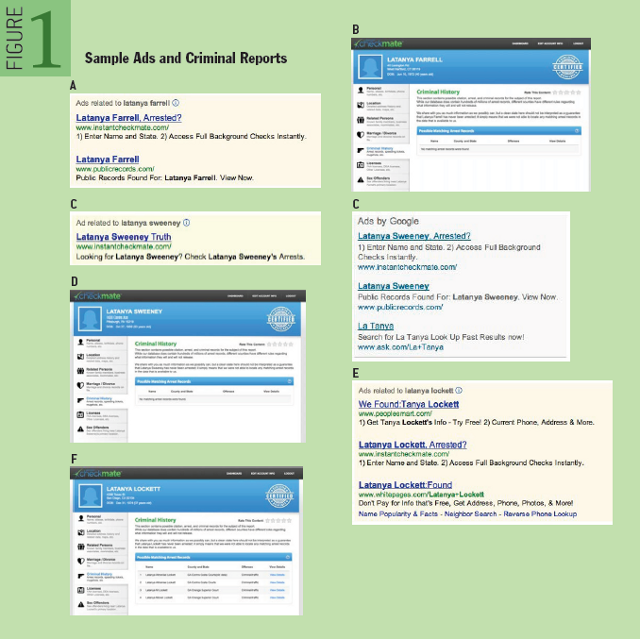

1. Latanya Sweeney Ad Discrimination

The first example is about the bias generated by digital ads while looking for various people on search engines. This was observed by Dr.Latanya Sweeney who is a professor at Harvard. While looking for her name on a search result, she found ambiguous ads such as the ones stating “Latanya Sweeney, arrested” and then she decided to investigate these search results. After conducting an experiment involving around 2000 variations of various names, she concluded that the search engine ads were biased towards a certain community of people and would show such results in order to increase the click rate. This was also properly summarised in the paper written here. An example of comparing such results can be observed below:

A clear bias can be observed in the search results and the resulting ads. However, when the advertiser was asked about this, it claimed that both ad variants shown in the figure had been submitted for every name. Based on the clicks it observed, the algorithm learnt to assign one of the two submitted variants to African-American names and the other to other names, introducing the bias on its own. In this case, then, the algorithm was at fault; on the human side, this is at most a borderline case of ‘misinformation’.
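The click-driven mechanism described above can be illustrated with a toy ad selector. This is a minimal sketch under stated assumptions, not the search engine’s actual ad system, whose internals are not public: the name groups (`group_a`, `group_b`), the two ad variants and the click-through rates in `ASSUMED_CLICK_RATES` are all hypothetical. The selector shows each variant for a fixed number of impressions per name group, then always serves whichever variant earned the higher click-through rate (CTR) for that group.

```python
from collections import defaultdict

# Hypothetical click-through rates (assumption, not real data): searchers
# click the "arrested" variant more often for group_a names than for group_b.
ASSUMED_CLICK_RATES = {
    ("group_a", "neutral"): 0.030,
    ("group_a", "arrested"): 0.045,
    ("group_b", "neutral"): 0.030,
    ("group_b", "arrested"): 0.025,
}
VARIANTS = ("neutral", "arrested")
GROUPS = ("group_a", "group_b")


class CtrAdSelector:
    """Toy selector: explore every variant for a fixed number of impressions,
    then always serve the variant with the highest observed CTR per group."""

    def __init__(self, explore_impressions: int = 1000):
        self.explore_impressions = explore_impressions
        self.impressions = defaultdict(int)
        self.clicks = defaultdict(float)

    def ctr(self, group: str, variant: str) -> float:
        shown = self.impressions[(group, variant)]
        return self.clicks[(group, variant)] / shown if shown else 0.0

    def choose(self, group: str) -> str:
        # Exploration: keep showing a variant until it has enough impressions.
        for variant in VARIANTS:
            if self.impressions[(group, variant)] < self.explore_impressions:
                return variant
        # Exploitation: serve whichever variant users clicked most for this group.
        return max(VARIANTS, key=lambda v: self.ctr(group, v))

    def record(self, group: str, variant: str, clicks: float) -> None:
        self.impressions[(group, variant)] += 1
        self.clicks[(group, variant)] += clicks


def simulate(rounds: int = 5000) -> dict:
    selector = CtrAdSelector()
    for group in GROUPS:
        for _ in range(rounds):
            variant = selector.choose(group)
            # Record the expected number of clicks instead of a random draw,
            # so the simulation is deterministic.
            selector.record(group, variant, ASSUMED_CLICK_RATES[(group, variant)])
    return {group: selector.choose(group) for group in GROUPS}


if __name__ == "__main__":
    print(simulate())  # {'group_a': 'arrested', 'group_b': 'neutral'}
```

Nothing in this sketch tells the selector to treat the two groups differently; the disparity comes entirely from the (assumed) click behaviour it optimises for, which is the sense in which the algorithm, rather than the advertiser, introduced the bias.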

2. Houston Protest Trollification

On 21 May 2016, two non-violent groups of protesters gathered at the Islamic Da’wah Center in Houston. One group held signs reading “White Lives Matter”; its members had been led to believe that the center’s new library was publicly funded, and they came to protest its opening. The other group held signs supporting the opening, with slogans like “Muslims are welcome here”. Even though the protest wasn’t violent, tension ran high among the people present.

The twist to this story is that the real instigators of the protest weren’t even in the country at the time: they were sitting at home in St. Petersburg, Russia. Both protests had been organised as Facebook events by two seemingly opposed Facebook groups: “Heart of Texas”, with 250,000 likes, and “United Muslims of America”, with 320,000 likes. A federal investigation into Russia’s influence on the 2016 election later linked both accounts to the same St. Petersburg troll hub.

This is a case where people spread disinformation in order to create chaos and harm two distinct groups of people. As the use of social networks grows, this is a major problem that has to be dealt with.

More on Disinformation

These examples show that disinformation isn’t just about spreading fake news. It also includes memes, videos and social media posts, spread in the form of rumors, hoaxes, propaganda, misleading content and misleading context. It is therefore unwise to use the terms “fake news” and “disinformation” interchangeably. As the case studies showed, disinformation mostly takes the form of misleading content, not necessarily fabricated content, so one should be careful when referring to it.

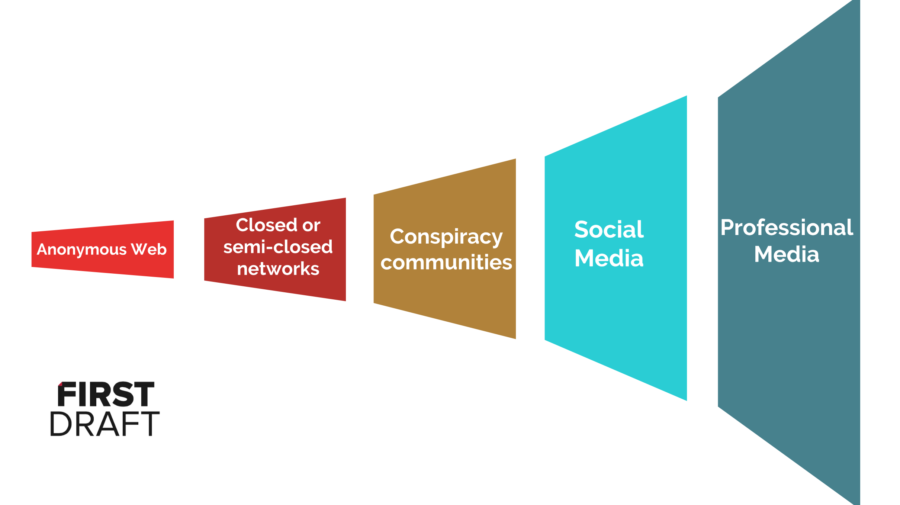

Disinformation also doesn’t just necessarily refer to one single content entity. It could be a whole campaign of planned manipulation of an entire community. There is a general framework to how this manipulation is carried out and how it reaches the masses. This framework proposed by Dr. Claire Wardle was called “Trumpet of Amplification” and can be represented as:

The trumpet shows how misleading information often starts on anonymous websites such as 4chan or 8chan. It is then passed on and starts doing the rounds in messaging groups on WhatsApp, Telegram or Facebook Messenger. After gaining some traction, it is posted and shared in conspiracy communities on Reddit, and YouTube videos are even made about it. Influential social media accounts then start covering it, and eventually it is covered by the mainstream media. Because the misleading information is already so widespread by then, the final stages of the trumpet are sometimes unable to fully verify its authenticity. Campaigns like these often undermine democracy.

The Issue of Verification

Zeynep Tufekci has written a very good piece about the verification of facts and the threat of surveillance that verification can raise:

When people argue against verification efforts, they often raise the issue of authoritarian regimes surveilling dissidents. There’s good reason for that concern, but dissidents probably need verification more than anyone else. Indeed, when I talk to dissidents around the world, they rarely ask me how they can post information anonymously, but do often ask me how to authenticate the information they post—“yes, the picture was taken at this place and on this date by me.” When it’s impossible to distinguish facts from fraud, actual facts lose their power. Dissidents can end up putting their lives on the line to post a picture documenting wrongdoing only to be faced with an endless stream of deliberately misleading claims: that the picture was taken 10 years ago, that it’s from somewhere else, that it’s been doctored.

Hack, Leak, Authenticate!

An information dump, or leak, is when a large batch of documents is released online. One example is the “Hillary Clinton email leak”, which came to light during the 2016 U.S. presidential election.

In such cases, information is often misused in one of two ways. The first is misrepresenting real documents, or mixing fake documents into a large dump of real ones. Because the genuine documents are accurate, the audience often falls into something like the hot hand fallacy and believes every document in the dump to be real. This is what happened when a Russian hacker collective called Fancy Bear attacked the World Anti-Doping Agency and leaked its data. The group was agitated by talk of barring Russian athletes from the Olympic Games over doping incidents, so it leaked the test documents of several athletes who had received therapeutic use exemptions, including gymnast Simone Biles, tennis players Venus and Serena Williams and basketball player Elena Delle Donne. These athletes were portrayed in the wrong light, and Russia was portrayed as the victim. This portrayal was later shown to be false.

The other method of manipulation is Narrative Laundering: a story is built out of real documents. The story is often misleading or even fake, and it cites specific facts from the documents to appear authentic. As an example, suppose only one excerpt of a medical paper is considered and a treatment is given based on it. Even if the paper goes on to say that the method in the excerpt is not recommended, the excerpt on its own suggests otherwise and misleads the audience fed this laundered story. Similarly, generalising findings from medical papers that experimented on only a certain sub-group of the population, rather than the population as a whole, can lead to improper practices with potentially fatal results.

Virtual Content, Real Influence

The earlier case studies show that social media systems often promote and incentivise disinformation. Since we don’t really live in an Orwellian world right now, it seems safe to say that this is a design flaw rather than something done on purpose.

- Social media networks are designed so that content receiving instant feedback (quantified as the number of likes) is rewarded immediately.

- When a user consumes more content in a particular category, the algorithm assumes satisfaction with that category and recommends more of it, without further verification or any broader feedback loop with the user.

- Business models designed to increase user consumption also push such disinformation and propaganda.
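The consumption feedback loop in the bullets above can be sketched as a toy recommender. This is a deliberately simplified, hypothetical model, not how any real platform ranks content: it assumes the platform always recommends the category the user has consumed most, and that the user engages with whatever is recommended. The functions `recommend` and `run_feedback_loop` and the category names are illustrative only.

```python
from collections import Counter


def recommend(history: Counter, k: int = 1) -> list:
    """Rank categories purely by past consumption (no verification step)."""
    return [category for category, _ in history.most_common(k)]


def run_feedback_loop(initial: dict, steps: int) -> Counter:
    history = Counter(initial)
    for _ in range(steps):
        top = recommend(history)[0]
        # Assumption: the user engages with the top recommendation, and the
        # system treats that engagement as satisfaction with the category.
        history[top] += 1
    return history


if __name__ == "__main__":
    # Hypothetical starting point: a one-view lead for "conspiracy" content.
    final = run_feedback_loop({"news": 5, "sports": 5, "conspiracy": 6}, steps=100)
    share = final["conspiracy"] / sum(final.values())
    print(final)           # Counter({'conspiracy': 106, 'news': 5, 'sports': 5})
    print(f"{share:.0%}")  # 91%
```

Because consumption is the only signal, a one-view head start grows into more than 90% of everything the user sees; nothing in the loop ever checks whether the content is accurate.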

As Renee DiResta (@noUpside) rightly put it at MozFest 2018:

“Our political conversations are happening on an infrastructure built for viral advertising.”

Such content is also used to heavily influence the people consuming it. People often drop a viewpoint when faced with strong criticism, which makes an audience easier to manipulate when a prominent community already appears to support a misleading point of view. This can also be observed with the KKK (Ku Klux Klan): when a neighbourhood engages in such racially discriminatory practices, open-minded people start receiving backlash and give in to extremist views. Social networks amplify this effect, because it is very easy to manufacture a fake “fan following” with fake accounts. This can be summarised as:

“Extreme viewpoints can be normalized when we think there are others around us who hold the same views”

A very good question raised during the lecture was:

“If Big Tech companies build effective tools to detect such fake accounts or extremist propaganda accounts and shut them down completely with the help of their superior engineering workforce, wouldn’t it lower the daily active user count significantly and would that in turn make them hesitant in taking action?”

This is a point worth thinking about, as organisations are rarely 100% evil or 100% ethical.

We know the problems now, so how can we solve them?

The Pinterest Pandemonium

In 2019, there was a huge controversy over vaccine safety, and people identified as anti-vaxxers were dubious about what the vaccines contained. These groups relied on social media posts, those utmost “reliable” sources of information, to learn about vaccines. To prevent the spread of disinformation, Pinterest implemented a feature that automatically redirected vaccine-related searches to content from verified sources such as the CDC and WHO.

However, this didn’t go as expected. The redirection made anti-vaxxers more paranoid, convinced that the vaccines had something to hide that the “higher-ups” didn’t want them to know. It also revealed that social media networks could exercise authoritarian control to the point of completely manipulating search results (even if it was done for good). Giving such authoritative power to social networks is therefore definitely not the solution to ending disinformation; in fact, it could increase dissent among users.

The Ctrl + F solution

Instead of dwelling on the intricate faults of such huge networks, users can improve their own digital literacy. Much of the verification of an article, or any digital content for that matter, can be carried out by the people consuming it in as little as 30 seconds. Mike Caulfield proposed an approach for this in his course Check, Please!. This quick approach is often considered better than lengthy research across various other websites, which can turn up even more misleading information on the same topic. The course is a practical way to learn to verify doubtful content and so reduce the disinformation spreading among people.

One might ask: if the approach is that simple, why isn’t it automated and built into the networks themselves? The approach is simple, but it is very context-specific, so it is better for users to run the checks themselves than for platforms to scan content. Scanning content might be considered acceptable on public platforms such as Twitter, but it isn’t acceptable on end-to-end encrypted platforms like WhatsApp: it could expose sensitive messages, and the app would lose its crucial privacy feature.

Large Scale Issues

At a larger scale, even information-centric platforms like Wikipedia rely on editors who fact-check the content posted. However, the revision history on such platforms reveals an ongoing arms race between those spreading disinformation and the fact-checkers. It is therefore the responsibility of tech companies and consumers alike to fight this serious problem and prevent critical situations such as the Brazilian election issue.

Thanks for reading! Hope you liked it :)